As global connectivity ushers in the next era of communication, 6G networks are poised to revolutionize technology with unprecedented capabilities. These advancements will rely heavily on Artificial Intelligence (AI) and Machine Learning (ML), which are expected to enhance network efficiency, facilitate intelligent automation, and support a wide range of use cases. However, the integration of these cutting-edge technologies into 6G networks introduces a new set of security challenges. To address these concerns, the ROBUST-6G project has released its Deliverable 3.1: Threat Assessment and Prevention Report, offering a thorough examination of vulnerabilities and innovative strategies to safeguard AI/ML deployments.

The Role of AI/ML in 6G

AI and ML technologies serve as the foundation of 6G networks, driving innovation across multiple domains:

- Dynamic Network Management: AI/ML algorithms can optimize resource allocation in real time, enhance spectrum management, and improve fault detection, enabling networks to adapt to fluctuating demands.

- Personalized User Experiences: Predictive analytics and intelligent decision-making can deliver tailored services, from autonomous vehicles to immersive augmented reality (AR) and virtual reality (VR) applications.

- Enhanced Security: AI-driven tools can enhance threat detection and accelerate response times, safeguarding sensitive data and ensuring secure and reliable communication.

However, achieving this level of efficiency, programmability, and flexibility introduces significant complexity in managing and operating these networks. A key challenge in this transformation is protecting AI/ML components against cybersecurity risks. Without proactive measures, attacks on AI/ML could undermine the reliability and trustworthiness of 6G networks.

Challenges of AI/ML in 6G Ecosystems

The unique characteristics of 6G networks—such as high-density connectivity, edge computing, and real-time processing—intensify security and privacy risks for AI/ML. Key challenges include:

Scalability of Threats: The scale and complexity of 6G networks increase the attack surface, making it easier for adversaries to compromise multiple nodes simultaneously. Distributed AI/ML systems are particularly at risk due to their reliance on collaborative data sharing and decentralized training processes.

Real-Time Data Vulnerabilities: The emphasis on real-time data processing in 6G networks amplifies the risk of data manipulation during collection and transmission. Adversaries could exploit these vulnerabilities to disrupt time-sensitive applications, such as autonomous driving or industrial automation.

Heterogeneity in Devices and Data: 6G networks must support a wide range of devices and data sources, from IoT sensors to high-performance computing nodes. This heterogeneity increases the risk of compatibility issues and security loopholes, providing adversaries with more opportunities to exploit weaknesses across different system components.

Comprehensive Threat Assessment: Key Challenges Identified

To ensure the reliability and trustworthiness of AI/ML systems in 6G networks, it is crucial to understand the breadth and depth of potential security threats. Using the STRIDE framework as a foundation, ROBUST-6G Deliverable 3.1 systematically categorizes threats into six primary dimensions: Spoofing, Tampering, Repudiation, Information Disclosure, Denial of Service (DoS), and Elevation of Privilege. These categories highlight the multifaceted nature of risks in 6G environments. The following expands on the critical challenges identified in this deliverable:

Adversarial Threats

Adversarial threats pose significant risks to the performance and reliability of AI/ML systems. These attacks exploit the vulnerability of models by manipulating inputs or training data, leading to erroneous or potentially harmful outcomes. The primary types of adversarial threats are:

Poisoning Attacks: Poisoning attacks involve injecting corrupted or malicious data into the training datasets. These attacks are especially dangerous in federated and distributed AI/ML scenarios, where models rely on data contributions from multiple, potentially untrusted sources. For example, in an autonomous vehicle scenario, a poisoning attack might cause the model to misclassify a stop sign, leading to safety-critical failures. Consequently, poisoning attacks can:

- Degrade overall model accuracy, reducing reliability across all use cases.

- Introduce targeted vulnerabilities, such as backdoors, enabling attackers to manipulate specific outcomes.

Evasion Attacks: Evasion attacks occur at the inference stage, where attackers craft inputs designed to mislead the model without altering its structure. These attacks exploit the sensitivity of AI/ML models to minor perturbations: changes that are often imperceptible to humans can still alter the model's output. Beyond causing individual misclassifications, they undermine trust in AI/ML systems, particularly in high-risk environments.

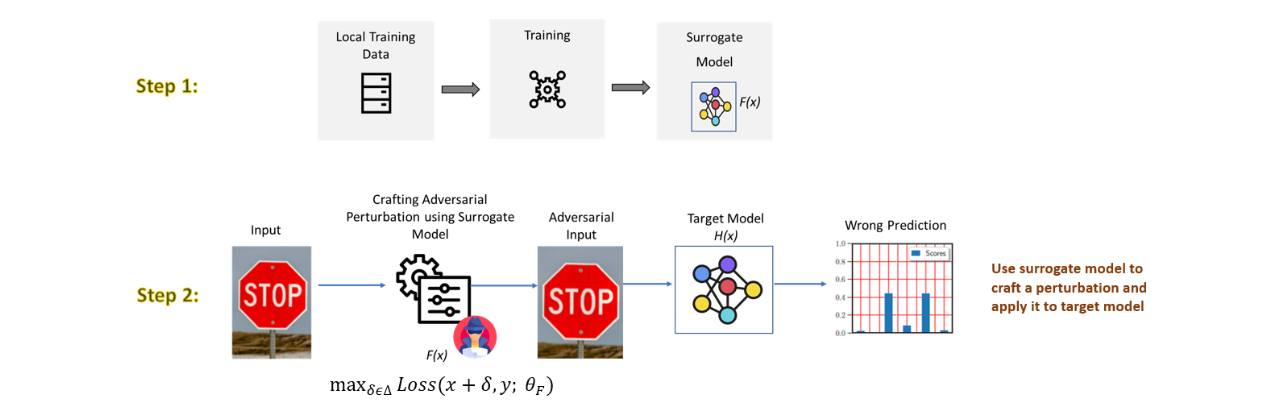
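As a minimal sketch of how such a perturbation can be crafted, the example below applies the fast gradient sign method (FGSM), one well-known evasion technique, to a toy logistic-regression classifier. The weights, input, and `eps` budget are hypothetical values chosen for illustration and are not taken from Deliverable 3.1.

```python
import math

def predict_proba(w, b, x):
    """Probability of class 1 under a logistic-regression model."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))

def sign(v):
    """Return -1, 0, or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)

def fgsm(w, b, x, y, eps):
    """Shift each feature by eps in the direction that increases the loss.

    For logistic regression with cross-entropy loss, dL/dx = (p - y) * w.
    """
    p = predict_proba(w, b, x)
    grad_x = [(p - y) * wi for wi in w]
    return [xi + eps * sign(g) for xi, g in zip(x, grad_x)]

# Hypothetical model and input (illustrative values only).
w, b = [1.5, -2.0, 0.5], 0.1
x, y = [0.4, -0.1, 0.2], 1
x_adv = fgsm(w, b, x, y, eps=0.3)

clean_label = int(predict_proba(w, b, x) >= 0.5)
adv_label = int(predict_proba(w, b, x_adv) >= 0.5)
```

With these values the clean input is classified as 1 and the perturbed input as 0, although no feature moved by more than `eps`.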

Transferability Attacks: Transferability refers to the capability of adversarial examples generated for one model to successfully deceive another, even when the models differ in architecture or training data. This property enables attackers to bypass security measures by leveraging surrogate models to craft attacks, presenting significant challenges for robust AI/ML systems.

Data Supply Chain Attacks: In data supply chain attacks, adversaries compromise the integrity of data at various stages, including collection, preprocessing, or storage. These attacks are particularly prevalent in dynamic environments, such as 6G networks, where real-time data collection and continuous updates create vulnerabilities that can be exploited. Such attacks can lead to:

- Compromised model performance.

- Undetected vulnerabilities in downstream applications.

Privacy Threats

The integration of AI/ML systems into 6G networks introduces substantial risks to user privacy. These threats primarily target sensitive information embedded in training datasets, exploiting vulnerabilities in how models process, store, and share data. The most pressing privacy threats include:

Model Inversion Attacks: Model inversion attacks enable adversaries to reconstruct input data by exploiting model outputs and internal parameters. For example, in facial recognition systems, attackers can regenerate user images based on model predictions, leading to severe privacy breaches. This risk is significantly amplified in white-box settings, where the attacker has full access to the model’s architecture and weights.

Membership Inference Attacks (MIAs): MIAs aim to determine whether a specific data point was included in a model’s training set. Overfitted models, which exhibit significantly better performance on training data compared to unseen data, are particularly vulnerable to these attacks. These attacks pose severe risks in sectors that handle sensitive data.
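One simple way to see why overfitting matters is a loss-threshold test: samples on which the model's loss is unusually low are guessed to be training members. The sketch below assumes the attacker can obtain per-sample losses. The loss values and the threshold are hypothetical toy numbers, not measurements.

```python
def infer_membership(losses, threshold):
    """Guess 'member' for every sample whose loss falls below the threshold."""
    return [loss < threshold for loss in losses]

def attack_advantage(member_losses, nonmember_losses, threshold):
    """True-positive rate minus false-positive rate of the threshold attack."""
    tpr = sum(infer_membership(member_losses, threshold)) / len(member_losses)
    fpr = sum(infer_membership(nonmember_losses, threshold)) / len(nonmember_losses)
    return tpr - fpr

# Hypothetical per-sample losses from an overfitted model (toy values).
train_losses = [0.02, 0.05, 0.01, 0.10, 0.03]
unseen_losses = [0.90, 0.40, 0.08, 1.20, 0.65]

advantage = attack_advantage(train_losses, unseen_losses, threshold=0.15)
```

An advantage near 0 means the attacker does no better than guessing. The wider the gap between losses on training data and on unseen data, the larger the advantage tends to be.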

Data Reconstruction Attacks: Unlike MIAs, which determine whether a specific data point was included in a training set, reconstruction attacks attempt to rebuild entire datasets by analyzing model gradients or outputs. In federated learning systems, where gradient sharing is a common practice, attackers can use techniques like gradient inversion to extract sensitive information. This threat is especially concerning in applications involving personal data, such as health records or behavioral patterns.
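To illustrate why shared gradients can leak inputs, the sketch below uses the simplest possible case: a single linear unit with a bias, trained with squared error on one sample. In that setting the weight gradient is the input scaled by the bias gradient, so dividing one by the other recovers the input. The model, sample, and function names are hypothetical, and practical gradient inversion against deep networks is considerably more involved.

```python
def gradients(w, b, x, y):
    """Gradients of 0.5 * (w.x + b - y)^2 with respect to w and b."""
    err = sum(wi * xi for wi, xi in zip(w, x)) + b - y
    grad_w = [err * xi for xi in x]   # dL/dw = err * x
    grad_b = err                      # dL/db = err
    return grad_w, grad_b

def reconstruct_input(grad_w, grad_b):
    """Recover x from a single-sample gradient: x = grad_w / grad_b."""
    if grad_b == 0:
        raise ValueError("zero bias gradient: input cannot be recovered this way")
    return [g / grad_b for g in grad_w]

# Hypothetical client-side sample and model parameters.
w, b = [0.5, -1.0, 2.0], 0.1
private_x, y = [3.0, -2.0, 0.5], 1.0

shared_grad_w, shared_grad_b = gradients(w, b, private_x, y)
recovered_x = reconstruct_input(shared_grad_w, shared_grad_b)
```

Here `recovered_x` matches `private_x` up to floating-point error, even though only the gradients were shared.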

Explainability Threats

Explainable AI (XAI) is vital for fostering trust in AI/ML systems, as it provides transparency into how models make decisions. However, the same mechanisms that enhance interpretability can also introduce unique vulnerabilities, such as:

Fooling Explanations: Adversaries can manipulate explanation outputs to make models appear fair or unbiased, even when they are not. This is particularly harmful in high-stakes applications such as credit scoring or recruitment, where biased decisions can have profound societal impacts.

Exploitation of Transparency: XAI techniques, such as Shapley Additive Explanations (SHAP) or Local Interpretable Model-Agnostic Explanations (LIME), often reveal additional details about model behavior. These insights can be exploited by adversaries to:

- Reverse-engineer proprietary models, compromising intellectual property and security.

- Optimize adversarial attacks by identifying weak points in the decision-making process.

Trade-offs Between Explainability and Privacy: Efforts to enhance explainability can inadvertently expose sensitive information, creating a delicate balance between transparency and privacy. For instance, detailed model explanations may inadvertently leak proprietary algorithms or disclose user-specific data, increasing the risk of exploitation by adversaries.

Advanced Prevention Strategies

To address the diverse threats facing AI/ML systems in 6G networks, ROBUST-6G Deliverable 3.1 outlines several state-of-the-art prevention and mitigation strategies. These approaches aim to bolster the security, privacy, and robustness of AI/ML deployments, ensuring that 6G networks can withstand evolving cyber threats. Below, we delve into these strategies in greater detail:

Adversarial Training:

Training models on adversarial samples strengthens their ability to resist attacks. The resulting robustness against input manipulation makes this method particularly valuable for safety-critical applications such as autonomous vehicles and medical diagnostics, where reliability is paramount.
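The idea can be sketched with a toy logistic-regression model: at every step an adversarial copy of the sample is crafted against the current weights, and the model learns from both the clean and the perturbed version. The sketch uses FGSM to craft the samples, which is one common choice and an assumption here. The synthetic data, `eps`, learning rate, and epoch count are hypothetical.

```python
import math
import random

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def predict_proba(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def fgsm(w, b, x, y, eps):
    """Perturb x by eps per feature in the loss-increasing direction."""
    p = predict_proba(w, b, x)
    grads = [(p - y) * wi for wi in w]
    return [xi + eps * ((g > 0) - (g < 0)) for xi, g in zip(x, grads)]

def sgd_step(w, b, x, y, lr):
    """One cross-entropy gradient step for logistic regression."""
    err = predict_proba(w, b, x) - y
    return [wi - lr * err * xi for wi, xi in zip(w, x)], b - lr * err

def adversarial_train(data, eps, lr=0.1, epochs=20):
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in data:
            x_adv = fgsm(w, b, x, y, eps)        # craft against the current model
            w, b = sgd_step(w, b, x, y, lr)      # learn from the clean sample
            w, b = sgd_step(w, b, x_adv, y, lr)  # and from its adversarial copy
    return w, b

def accuracy(w, b, data):
    hits = sum((predict_proba(w, b, x) >= 0.5) == bool(y) for x, y in data)
    return hits / len(data)

# Synthetic two-class data (hypothetical): clusters around (-2, -2) and (2, 2).
rng = random.Random(0)
data = []
for _ in range(200):
    y = rng.randint(0, 1)
    center = 2.0 if y else -2.0
    data.append(([rng.gauss(center, 0.5), rng.gauss(center, 0.5)], y))

w, b = adversarial_train(data, eps=0.5)
clean_acc = accuracy(w, b, data)
robust_acc = accuracy(w, b, [(fgsm(w, b, x, y, 0.5), y) for x, y in data])
```

`clean_acc` measures accuracy on unmodified inputs and `robust_acc` on FGSM-perturbed ones. Comparing the two against a model trained without the adversarial step is a simple way to gauge what the extra training buys.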

Differential Privacy:

This technique protects sensitive data by introducing controlled noise during model training and inference. It is especially effective in federated learning and AI-as-a-Service (AIaaS) frameworks, as it helps mitigate the risk of data reconstruction and inference attacks. By carefully balancing privacy and accuracy, differential privacy ensures that individual data points remain protected without significantly compromising model performance.

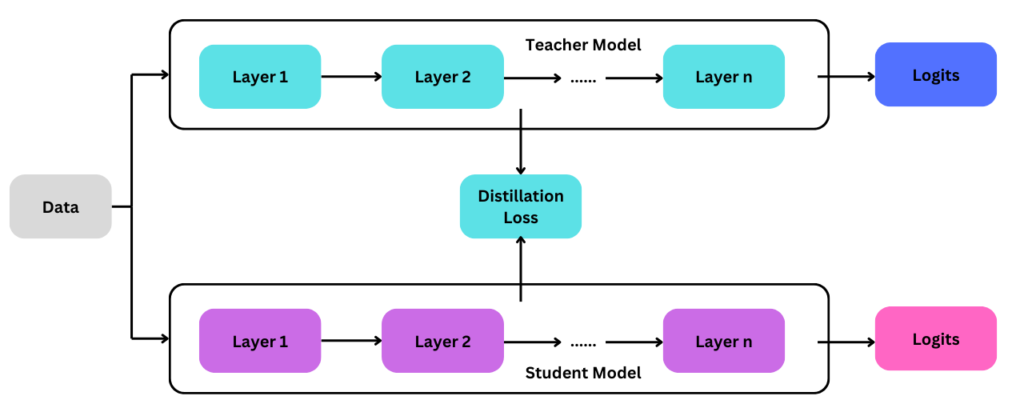
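One common way to add controlled noise during training is the clip-and-noise step used in differentially private SGD (DP-SGD). The sketch below shows only that step: each per-sample gradient is clipped to a maximum norm, the clipped gradients are summed, and Gaussian noise scaled to the clipping bound is added. The names `max_norm` and `noise_multiplier` are assumptions, and the accounting that turns them into a privacy budget is out of scope here.

```python
import math
import random

def clip(grad, max_norm):
    """Scale a gradient down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]

def private_mean_gradient(per_sample_grads, max_norm, noise_multiplier, rng):
    """Clip each gradient, sum, add Gaussian noise, then average."""
    clipped = [clip(g, max_norm) for g in per_sample_grads]
    dim = len(clipped[0])
    summed = [sum(g[i] for g in clipped) for i in range(dim)]
    std = noise_multiplier * max_norm  # noise scaled to the clipping bound
    noisy = [s + rng.gauss(0.0, std) for s in summed]
    return [v / len(clipped) for v in noisy]

# Hypothetical per-sample gradients for a two-parameter model.
grads = [[3.0, 4.0], [0.3, 0.4], [-1.0, 2.0]]
update = private_mean_gradient(grads, max_norm=1.0, noise_multiplier=1.1,
                               rng=random.Random(42))
```

Clipping bounds how much any single sample can influence the update, and the noise hides what remains. A larger `noise_multiplier` gives stronger protection at the cost of a noisier update.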

Knowledge Distillation:

Simplifying complex models through knowledge distillation reduces their susceptibility to adversarial attacks. This approach is ideal for edge devices in 6G networks, where resource constraints demand lightweight yet secure models.

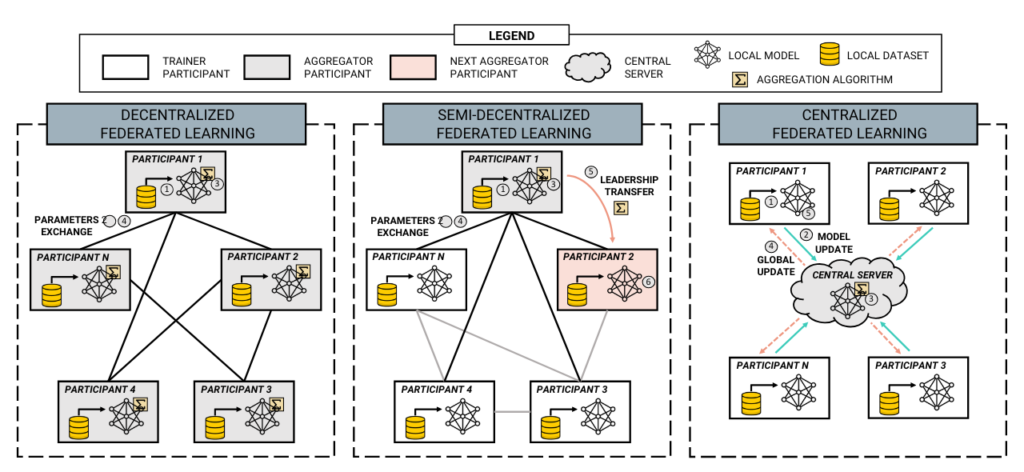
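Knowledge distillation is typically driven by a loss that pulls the small model's softened predictions toward those of the large model. The sketch below computes a commonly used form of that loss: temperature-scaled softmax followed by a KL divergence. The logits and the temperature are hypothetical, and the sketch shows only how knowledge is transferred, not the robustness effect itself.

```python
import math

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; higher temperatures give flatter outputs."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp((l - m) / temperature) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, temperature):
    """KL(teacher || student) on softened outputs, scaled by temperature^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return temperature ** 2 * kl

# Hypothetical logits for one input over three classes.
teacher = [4.0, 1.0, 0.5]
student = [2.5, 1.5, 0.2]
loss = distillation_loss(student, teacher, temperature=3.0)
```

The loss is zero when the student reproduces the teacher's softened distribution exactly and grows as the two diverge.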

Distributed Learning Mechanisms:

Processing data locally reduces risks associated with centralized storage and data transmission. Methods such as robust aggregation and Byzantine fault tolerance enhance resilience against malicious nodes and data poisoning in federated environments.
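As an illustration of robust aggregation, the sketch below compares plain averaging with a coordinate-wise median over client updates. The median is one of several robust aggregation rules and is chosen here only for brevity. The client updates are hypothetical.

```python
from statistics import median

def mean_update(updates):
    """Plain federated averaging: per-coordinate mean over clients."""
    n = len(updates)
    return [sum(u[i] for u in updates) / n for i in range(len(updates[0]))]

def median_update(updates):
    """Robust aggregation: per-coordinate median over clients."""
    return [median(u[i] for u in updates) for i in range(len(updates[0]))]

# Four honest clients and one malicious client (hypothetical values).
updates = [
    [0.9, 1.1],
    [1.0, 1.0],
    [1.0, 1.0],
    [1.1, 0.9],
    [100.0, -100.0],  # poisoned update
]

naive = mean_update(updates)
robust = median_update(updates)
```

A single poisoned update drags the mean far from the honest values, while the median stays at `[1.0, 1.0]` as long as malicious clients remain a minority.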

Input Anomaly Detection:

Detecting unusual patterns in input data helps identify potential poisoning or evasion attempts. Methods such as Gaussian Mixture Models (GMMs) and deep learning-based anomaly detection are key to maintaining model integrity in real time.
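A GMM-based detector can be sketched as a likelihood test: inputs that are very unlikely under a mixture fitted to normal traffic are flagged. The example below assumes a one-dimensional mixture that has already been fitted. The component parameters and the threshold are hypothetical, and fitting (for example with expectation-maximization) is not shown.

```python
import math

def gmm_log_likelihood(x, components):
    """Log-density of x under a 1-D Gaussian mixture.

    components: list of (weight, mean, std) tuples whose weights sum to 1.
    """
    density = 0.0
    for weight, mean, std in components:
        z = (x - mean) / std
        density += weight * math.exp(-0.5 * z * z) / (std * math.sqrt(2 * math.pi))
    return math.log(density) if density > 0 else float("-inf")

def is_anomalous(x, components, threshold):
    """Flag inputs whose log-likelihood falls below the threshold."""
    return gmm_log_likelihood(x, components) < threshold

# Hypothetical mixture assumed to be fitted on benign inputs.
benign_model = [(0.6, 0.0, 1.0), (0.4, 5.0, 0.5)]
LOG_LIKELIHOOD_THRESHOLD = -6.0  # assumed tuning parameter

flags = [is_anomalous(v, benign_model, LOG_LIKELIHOOD_THRESHOLD)
         for v in (0.2, 5.1, 12.0)]
```

In practice the threshold would be tuned on held-out benign data to balance missed attacks against false alarms.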

Gradient Masking and Obfuscation:

Obscuring gradients during training protects model parameters from extraction and inversion attacks. When combined with query rate limiting, this approach enhances security in scenarios involving sensitive AI services.

Robust Explainable AI (XAI):

Controlled transparency in XAI prevents adversaries from exploiting model explanations while preserving trust and interpretability. Robust XAI methods are particularly valuable in applications such as intrusion detection and fraud analysis.

Blockchain and Secure Computing:

Blockchain supports data integrity by maintaining tamper-evident, append-only records, while homomorphic encryption enables secure computations without exposing raw data. These techniques are vital in sectors such as finance and supply chain management.
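The integrity property rests on hash chaining: each record stores the hash of its predecessor, so altering any earlier record invalidates everything after it. The sketch below shows only that mechanism with a single-party hash chain. It is not a full blockchain (there is no consensus or distribution), and the record contents are hypothetical.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64

def block_hash(index, data, prev_hash):
    """SHA-256 over a canonical JSON encoding of the block contents."""
    payload = json.dumps({"index": index, "data": data, "prev": prev_hash},
                         sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()

def append_block(chain, data):
    """Append a record that commits to the hash of the previous record."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    index = len(chain)
    chain.append({"index": index, "data": data, "prev": prev_hash,
                  "hash": block_hash(index, data, prev_hash)})

def verify(chain):
    """Recompute every hash and link; return False on any mismatch."""
    prev_hash = GENESIS_HASH
    for i, block in enumerate(chain):
        if block["index"] != i or block["prev"] != prev_hash:
            return False
        if block["hash"] != block_hash(i, block["data"], prev_hash):
            return False
        prev_hash = block["hash"]
    return True

# Hypothetical log of training-data batches.
ledger = []
for record in ("batch-001 received", "batch-002 received", "model v1 trained"):
    append_block(ledger, record)
```

Calling `verify(ledger)` returns `True` for the untouched chain and `False` once any stored record is modified after the fact.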

Case Studies and Applications in 6G Technologies

ROBUST-6G Deliverable 3.1 applies these insights to critical 6G technologies, offering practical examples of threat mitigation:

Reconfigurable Intelligent Surfaces (RIS)

RIS enhance wireless communication by dynamically reconfiguring signal pathways. However, their integration with AI/ML introduces vulnerabilities such as spoofing and tampering. This report recommends leveraging reinforcement learning to detect anomalies and ensure signal integrity.

RF Sensing and Localization

AI-powered sensing technologies are essential for applications such as indoor navigation and activity recognition. To address adversarial attacks that disrupt sensing accuracy, this report suggests employing robust training methods and anomaly detection techniques.

AI-as-a-Service Frameworks (AIaaS)

Cloud-based AI services enable scalable deployment of AI/ML models but are vulnerable to model inversion and extraction attacks. This report highlights the importance of query rate limiting, encryption, and noise injection to safeguard these systems.
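Query rate limiting can be sketched as a sliding-window counter per client: extraction and inversion attacks usually need many queries, so capping the rate raises their cost. The class below is a minimal illustration. The class name, limits, and client identifiers are hypothetical, and timestamps are passed in explicitly to keep the example deterministic.

```python
from collections import deque

class QueryRateLimiter:
    """Allow at most max_queries per client within a sliding time window."""

    def __init__(self, max_queries, window_seconds):
        self.max_queries = max_queries
        self.window_seconds = window_seconds
        self._history = {}  # client_id -> deque of accepted query timestamps

    def allow(self, client_id, now):
        """Return True and record the query if the client is under its limit."""
        history = self._history.setdefault(client_id, deque())
        # Drop timestamps that have left the window.
        while history and now - history[0] >= self.window_seconds:
            history.popleft()
        if len(history) >= self.max_queries:
            return False
        history.append(now)
        return True

# Hypothetical policy: two queries per ten seconds per client.
limiter = QueryRateLimiter(max_queries=2, window_seconds=10)
decisions = [
    limiter.allow("client-a", now=0.0),
    limiter.allow("client-a", now=1.0),
    limiter.allow("client-a", now=2.0),   # third query inside the window
    limiter.allow("client-b", now=2.0),   # separate client, separate budget
    limiter.allow("client-a", now=10.0),  # oldest query has expired
]
```

A production service would typically combine such a limiter with the encryption and output-noise measures mentioned above, since rate limits alone only slow an attacker down.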

Paving the Way for a Secure 6G Future

As the ROBUST-6G project emphasizes, the path to secure and trustworthy AI/ML in 6G networks requires a proactive, multi-faceted approach. Deliverable 3.1 serves as a critical resource, guiding stakeholders to:

- Identify and prioritize vulnerabilities in AI/ML systems.

- Implement state-of-the-art prevention and mitigation techniques.

- Foster transparency and trust through explainable AI and robust governance frameworks.

By addressing these challenges head-on, the ROBUST-6G initiative strives to create a future where 6G networks are not only powerful and innovative but also secure and resilient. With these measures in place, AI and ML technologies can truly unlock the full potential of next-generation connectivity while safeguarding user trust and privacy.

For more details, visit ROBUST-6G-Deliverables.